I would like to share a few notes about the kubectl plugins I use, installed via krew. This is not intended to be a comprehensive description of these plugins; instead, I prefer to focus on examples and screenshots.

Requirements

Install krew

Install Krew, the plugin manager for the kubectl command-line tool:

| TMP_DIR="${TMP_DIR:-${PWD}}"

ARCH="amd64"

curl -sL "https://github.com/kubernetes-sigs/krew/releases/download/v0.4.5/krew-linux_${ARCH}.tar.gz" | tar -xvzf - -C "${TMP_DIR}" --no-same-owner --strip-components=1 --wildcards "*/krew-linux*"

"${TMP_DIR}/krew-linux_${ARCH}" install krew

rm "${TMP_DIR}/krew-linux_${ARCH}"

export PATH="${HOME}/.krew/bin:${PATH}"

|
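The block above pins ARCH to amd64. As a minimal sketch, assuming the Krew release archives follow the krew-linux_<arch> naming seen in the URL above, the architecture could instead be derived from uname -m. The helper name krew_arch is hypothetical and not part of Krew:

```bash
# Hypothetical helper (not part of krew): map a `uname -m` value to the
# architecture suffix used in krew release file names, e.g. krew-linux_amd64.
krew_arch() {
  machine="${1:-$(uname -m)}"
  case "${machine}" in
    x86_64) echo "amd64" ;;
    aarch64 | arm64) echo "arm64" ;;
    armv*) echo "arm" ;;
    *)
      echo "unsupported architecture: ${machine}" >&2
      return 1
      ;;
  esac
}

# Use the detected value in place of the hard-coded ARCH="amd64".
if ARCH="$(krew_arch)"; then
  echo "krew architecture: ${ARCH}"
fi
```

The mapping only covers the architectures I am sure about; anything else returns a non-zero status instead of guessing.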

My Favorite krew + kubectl plugins

Here is a list of my favorite Krew and kubectl plugins:

- cert-manager: A kubectl add-on for cert-manager, which automates the management and issuance of TLS certificates. The plugin lets you interact directly with cert-manager resources, for example to manually renew a Certificate resource.

Installation of the cert-manager Krew plugin:

| kubectl krew install cert-manager

|

Get details about the current status of a cert-manager Certificate resource, including information on related resources like CertificateRequest or Order:

| kubectl cert-manager status certificate --namespace cert-manager ingress-cert-staging

|

| Name: ingress-cert-staging

Namespace: cert-manager

Created at: 2023-06-18T07:31:46Z

Conditions:

Ready: True, Reason: Ready, Message: Certificate is up to date and has not expired

DNS Names:

- *.k01.k8s.mylabs.dev

- k01.k8s.mylabs.dev

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Issuing 41m cert-manager-certificates-trigger Issuing certificate as Secret does not exist

Normal Generated 41m cert-manager-certificates-key-manager Stored new private key in temporary Secret resource "ingress-cert-staging-jbw7s"

Normal Requested 41m cert-manager-certificates-request-manager Created new CertificateRequest resource "ingress-cert-staging-r2mnb"

Normal Reused 37m cert-manager-certificates-key-manager Reusing private key stored in existing Secret resource "ingress-cert-staging"

Normal Requested 37m cert-manager-certificates-request-manager Created new CertificateRequest resource "ingress-cert-staging-jm8c2"

Normal Issuing 37m (x2 over 38m) cert-manager-certificates-issuing The certificate has been successfully issued

Issuer:

Name: letsencrypt-staging-dns

Kind: ClusterIssuer

Conditions:

Ready: True, Reason: ACMEAccountRegistered, Message: The ACME account was registered with the ACME server

Events: <none>

Secret:

Name: ingress-cert-staging

Issuer Country: US

Issuer Organisation: (STAGING) Let's Encrypt

Issuer Common Name: (STAGING) Artificial Apricot R3

Key Usage: Digital Signature, Key Encipherment

Extended Key Usages: Server Authentication, Client Authentication

Public Key Algorithm: RSA

Signature Algorithm: SHA256-RSA

Subject Key ID: 6ad5d66e8d4e46409107d6af11283ef603f5113b

Authority Key ID: de727a48df31c3a650df9f8523df57374b5d2e65

Serial Number: fabb47cea28a80ce5add9eb5e02c5e7c8273

Events: <none>

Not Before: 2023-06-18T06:36:23Z

Not After: 2023-09-16T06:36:22Z

Renewal Time: 2023-08-17T06:36:22Z

No CertificateRequest found for this Certificate

|
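The Renewal Time in the output above can be reproduced by hand. This is a minimal sketch under two assumptions: that cert-manager uses its default of renewing once two thirds of the certificate lifetime has passed (this Certificate may set renewBefore differently), and that the local date command accepts -d with 'YYYY-MM-DD hh:mm:ss' and '@epoch' arguments, as GNU date does. The function names are hypothetical:

```bash
# Convert an RFC 3339 UTC timestamp such as 2023-06-18T06:36:23Z to epoch seconds.
to_epoch() {
  date -u -d "$(printf '%s' "$1" | sed -e 's/T/ /' -e 's/Z$//')" +%s
}

# Print the point at which two thirds of the validity period have elapsed.
renewal_time() {
  not_before="$(to_epoch "$1")"
  not_after="$(to_epoch "$2")"
  renew_at=$((not_before + 2 * (not_after - not_before) / 3))
  date -u -d "@${renew_at}" +%Y-%m-%dT%H:%M:%SZ
}

# Values taken from the "Not Before" and "Not After" lines above.
renewal_time "2023-06-18T06:36:23Z" "2023-09-16T06:36:22Z"
```

With those two values the function prints 2023-08-17T06:36:22Z, which matches the Renewal Time line in the status output.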

Mark cert-manager Certificate resources for manual renewal:

| kubectl cert-manager renew --namespace cert-manager ingress-cert-staging

sleep 5

kubectl cert-manager inspect secret --namespace cert-manager ingress-cert-staging | grep -A2 -E 'Validity period'

|

| Manually triggered issuance of Certificate cert-manager/ingress-cert-staging

Validity period:

Not Before: Sun, 18 Jun 2023 07:15:58 UTC

Not After: Sat, 16 Sep 2023 07:15:57 UTC

|

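The first certificate was valid from 2023-06-18 06:36:23 UTC (the Not Before value in the status output). After the manual renewal, Not Before is 2023-06-18 07:15:58 UTC, which confirms that the certificate was rotated.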

- get-all: Similar to kubectl get all, but it retrieves truly all resources.

Installation of the get-all Krew plugin:

| kubectl krew install get-all

|

Get all resources from the default namespace:

| kubectl get-all -n default

|

| NAME NAMESPACE AGE

configmap/kube-root-ca.crt default 68m

endpoints/kubernetes default 69m

serviceaccount/default default 68m

service/kubernetes default 69m

endpointslice.discovery.k8s.io/kubernetes default 69m

|
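For comparison, a similar listing can be approximated with built-in kubectl commands alone. This is a rough sketch, not how the get-all plugin itself works; the function name is hypothetical, and it is noticeably slower because it issues one request per resource type:

```bash
# Hypothetical helper: list every namespaced object in a namespace by asking
# the API server for all listable resource types and querying each in turn.
get_all_in_namespace() {
  ns="${1:-default}"
  kubectl api-resources --verbs=list --namespaced -o name |
    xargs -n 1 kubectl get --show-kind --ignore-not-found -n "${ns}"
}
```

Calling get_all_in_namespace default should cover the same resource types as the plugin output above, although ordering and formatting will differ.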

- ice is an open-source tool that helps Kubernetes users monitor and optimize container resource usage.

Installation of the ice Krew plugin:

| kubectl krew install ice

|

List CPU information for containers within pods:

| kubectl ice cpu -n kube-prometheus-stack --sort used

|

| PODNAME CONTAINER USED REQUEST LIMIT %REQ %LIMIT

prometheus-kube-prometheus-stack-prometheus-0 config-reloader 0m 200m 200m - -

alertmanager-kube-prometheus-stack-alertmanager-0 alertmanager 1m 0m 0m - -

alertmanager-kube-prometheus-stack-alertmanager-0 config-reloader 1m 200m 200m 0.01 0.01

kube-prometheus-stack-grafana-896f8645-6q9lb grafana-sc-dashboard 1m - - - -

kube-prometheus-stack-grafana-896f8645-6q9lb grafana-sc-datasources 1m - - - -

kube-prometheus-stack-operator-7f45586f68-9rz6j kube-prometheus-stack 1m - - - -

kube-prometheus-stack-kube-state-metrics-669bd5c594-vfznb kube-state-metrics 2m - - - -

kube-prometheus-stack-prometheus-node-exporter-m4k5m node-exporter 2m - - - -

kube-prometheus-stack-prometheus-node-exporter-x5bhm node-exporter 2m - - - -

kube-prometheus-stack-grafana-896f8645-6q9lb grafana 8m - - - -

prometheus-kube-prometheus-stack-prometheus-0 prometheus 52m - - - -

|

List memory information for containers within pods:

| kubectl ice memory -n kube-prometheus-stack --node-tree

|

| NAMESPACE NAME USED REQUEST LIMIT %REQ %LIMIT

kube-prometheus-stack StatefulSet/alertmanager-kube-prometheus-stack-alertmanager 19.62Mi 250.00Mi 50.00Mi 0.04 0.01

kube-prometheus-stack └─Pod/alertmanager-kube-prometheus-stack-alertmanager-0 19.62Mi 250.00Mi 50.00Mi 0.04 0.01

kube-prometheus-stack └─Container/alertmanager 16.44Mi 200Mi 0 8.22 -

kube-prometheus-stack └─Container/config-reloader 3.18Mi 50Mi 50Mi 6.35 6.35

- Node/ip-192-168-26-84.ec2.internal 241.14Mi 0 0 - -

kube-prometheus-stack └─Deployment/kube-prometheus-stack-grafana 231.98Mi 0 0 - -

kube-prometheus-stack └─ReplicaSet/kube-prometheus-stack-grafana-896f8645 231.98Mi 0 0 - -

kube-prometheus-stack └─Pod/kube-prometheus-stack-grafana-896f8645-6q9lb 231.98Mi 0 0 - -

kube-prometheus-stack └─Container/grafana-sc-dashboard 70.99Mi - - - -

kube-prometheus-stack └─Container/grafana-sc-datasources 72.67Mi - - - -

kube-prometheus-stack └─Container/grafana 88.32Mi - - - -

kube-prometheus-stack └─DaemonSet/kube-prometheus-stack-prometheus-node-exporter 9.16Mi 0 0 - -

kube-prometheus-stack └─Pod/kube-prometheus-stack-prometheus-node-exporter-m4k5m 9.16Mi 0 0 - -

kube-prometheus-stack └─Container/node-exporter 9.16Mi - - - -

- Node/ip-192-168-7-23.ec2.internal 44.42Mi 0 0 - -

kube-prometheus-stack └─Deployment/kube-prometheus-stack-kube-state-metrics 12.68Mi 0 0 - -

kube-prometheus-stack └─ReplicaSet/kube-prometheus-stack-kube-state-metrics-669bd5c594 12.68Mi 0 0 - -

kube-prometheus-stack └─Pod/kube-prometheus-stack-kube-state-metrics-669bd5c594-vfznb 12.68Mi 0 0 - -

kube-prometheus-stack └─Container/kube-state-metrics 12.68Mi - - - -

kube-prometheus-stack └─Deployment/kube-prometheus-stack-operator 22.64Mi 0 0 - -

kube-prometheus-stack └─ReplicaSet/kube-prometheus-stack-operator-7f45586f68 22.64Mi 0 0 - -

kube-prometheus-stack └─Pod/kube-prometheus-stack-operator-7f45586f68-9rz6j 22.64Mi 0 0 - -

kube-prometheus-stack └─Container/kube-prometheus-stack 22.64Mi - - - -

kube-prometheus-stack └─DaemonSet/kube-prometheus-stack-prometheus-node-exporter 9.10Mi 0 0 - -

kube-prometheus-stack └─Pod/kube-prometheus-stack-prometheus-node-exporter-x5bhm 9.10Mi 0 0 - -

kube-prometheus-stack └─Container/node-exporter 9.11Mi - - - -

kube-prometheus-stack StatefulSet/prometheus-kube-prometheus-stack-prometheus 400.28Mi 50.00Mi 50.00Mi 0.80 0.80

kube-prometheus-stack └─Pod/prometheus-kube-prometheus-stack-prometheus-0 400.28Mi 50.00Mi 50.00Mi 0.80 0.80

kube-prometheus-stack └─Container/prometheus 393.89Mi - - - -

kube-prometheus-stack └─Container/config-reloader 6.38Mi 50Mi 50Mi 12.77 12.77

|

List image information for containers within pods:

| kubectl ice image -n cert-manager

|

| PODNAME CONTAINER PULL IMAGE TAG

cert-manager-777fbdc9f8-ng8dg cert-manager-controller IfNotPresent quay.io/jetstack/cert-manager-controller v1.12.2

cert-manager-cainjector-65857fccf8-krpr9 cert-manager-cainjector IfNotPresent quay.io/jetstack/cert-manager-cainjector v1.12.2

cert-manager-webhook-54f9d96756-plv84 cert-manager-webhook IfNotPresent quay.io/jetstack/cert-manager-webhook v1.12.2

|

List the status of individual containers within pods:

| kubectl ice status -n kube-prometheus-stack

|

| PODNAME CONTAINER READY STARTED RESTARTS STATE REASON EXIT-CODE SIGNAL AGE

alertmanager-kube-prometheus-stack-alertmanager-0 init-config-reloader true - 0 Terminated Completed 0 0 100m

alertmanager-kube-prometheus-stack-alertmanager-0 alertmanager true true 0 Running - - - 100m

alertmanager-kube-prometheus-stack-alertmanager-0 config-reloader true true 0 Running - - - 100m

kube-prometheus-stack-grafana-896f8645-6q9lb download-dashboards true - 0 Terminated Completed 0 0 100m

kube-prometheus-stack-grafana-896f8645-6q9lb grafana true true 0 Running - - - 100m

kube-prometheus-stack-grafana-896f8645-6q9lb grafana-sc-dashboard true true 0 Running - - - 100m

kube-prometheus-stack-grafana-896f8645-6q9lb grafana-sc-datasources true true 0 Running - - - 100m

kube-prometheus-stack-kube-state-metrics-669bd5c594-vfznb kube-state-metrics true true 0 Running - - - 100m

kube-prometheus-stack-operator-7f45586f68-9rz6j kube-prometheus-stack true true 0 Running - - - 100m

kube-prometheus-stack-prometheus-node-exporter-m4k5m node-exporter true true 0 Running - - - 100m

kube-prometheus-stack-prometheus-node-exporter-x5bhm node-exporter true true 0 Running - - - 100m

prometheus-kube-prometheus-stack-prometheus-0 init-config-reloader true - 0 Terminated Completed 0 0 100m

prometheus-kube-prometheus-stack-prometheus-0 config-reloader true true 0 Running - - - 100m

prometheus-kube-prometheus-stack-prometheus-0 prometheus true true 0 Running - - - 100m

|

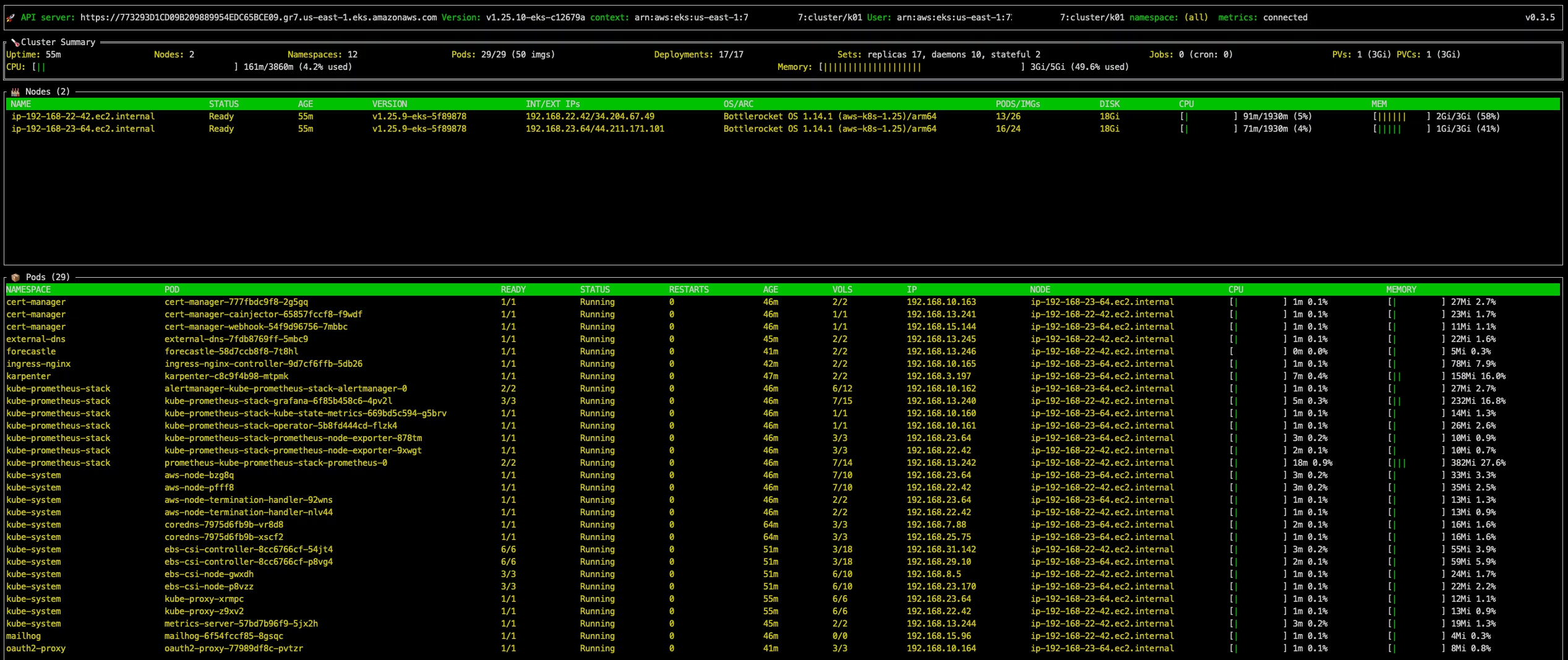

- ktop: A top-like tool for your Kubernetes clusters.

Installation of the ktop Krew plugin:

| kubectl krew install ktop

|

Run ktop:

ktop screenshot

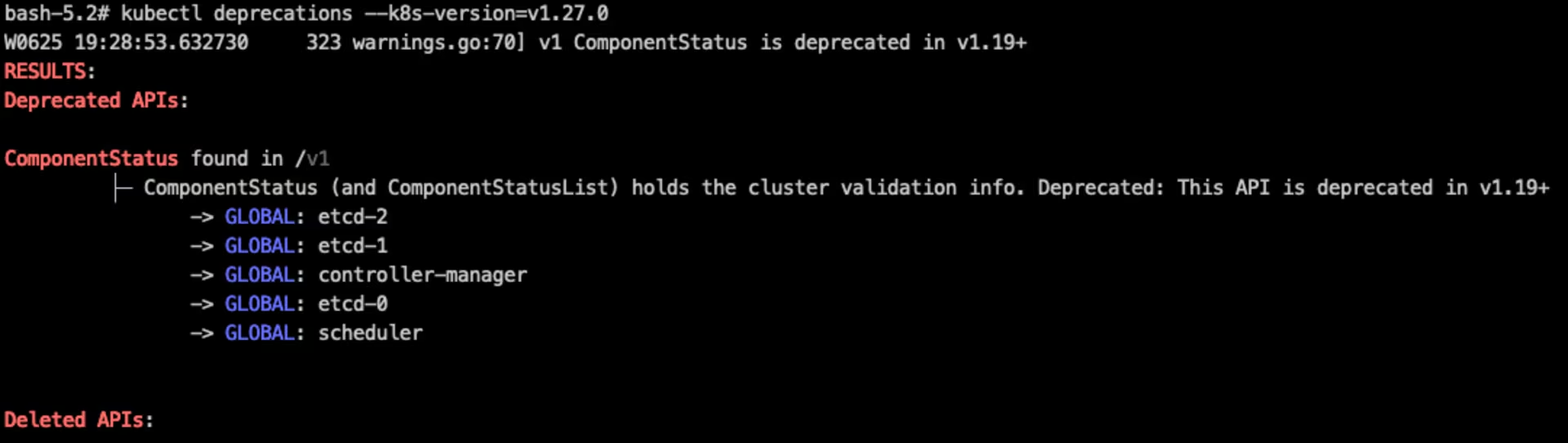

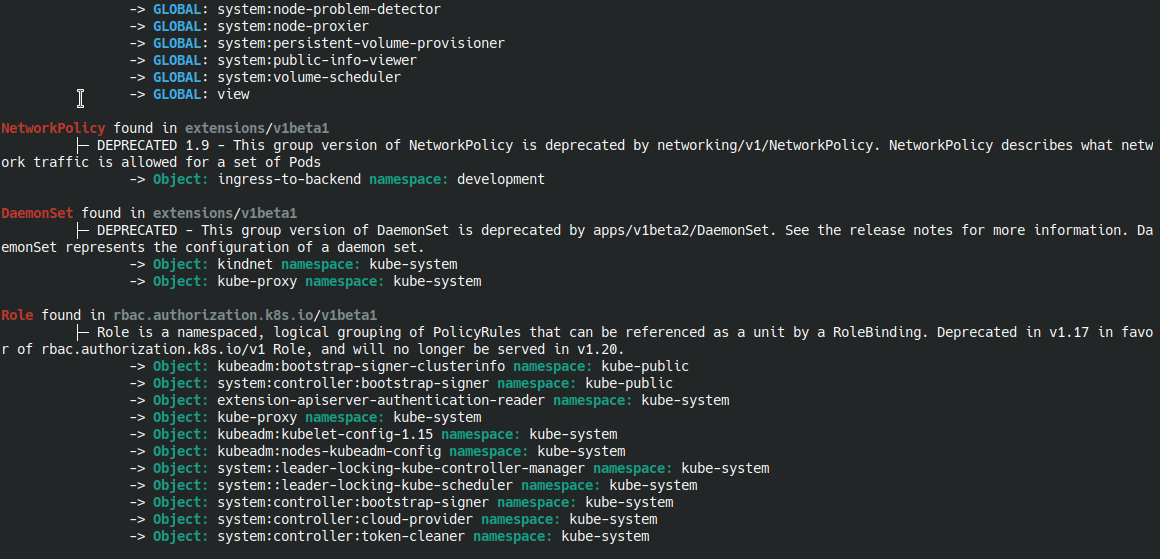

- deprecations (KubePug): A Kubernetes pre-upgrade checker.

KubePug logo

Installation of the deprecations Krew plugin:

| kubectl krew install deprecations

|

Show all deprecated objects in a Kubernetes cluster so that an operator can verify them before upgrading the cluster:

| kubectl deprecations --k8s-version=v1.27.0

|

deprecations screenshot

deprecations screenshot from official GitHub repository

- node-ssm: This kubectl plugin opens direct connections to AWS EKS cluster nodes managed by Systems Manager. It relies on the AWS CLI and the session-manager-plugin being installed locally.

Installation of the node-ssm Krew plugin:

| kubectl krew install node-ssm

|

Access a node using SSM:

| K8S_NODE=$(kubectl get nodes -o custom-columns=NAME:.metadata.name --no-headers | head -n 1)

kubectl node-ssm --target "${K8S_NODE}"

|

| Starting session with SessionId: ruzickap@M-C02DP163ML87-k8s-1687787750-03553ad56b6a28df6

Welcome to Bottlerocket's control container!

╱╲

╱┄┄╲ This container gives you access to the Bottlerocket API,

...

...

...

[ssm-user@control]$

|
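Because node-ssm depends on the AWS CLI and the session-manager-plugin being installed locally, it can help to check for both before starting a session. A minimal sketch with a hypothetical helper name, assuming the two tools are on PATH under the names aws and session-manager-plugin:

```bash
# Hypothetical helper: print the commands that are missing from PATH and
# return a non-zero status if at least one is missing.
missing_tools() {
  missing=""
  for tool in "$@"; do
    if ! command -v "${tool}" > /dev/null 2>&1; then
      missing="${missing} ${tool}"
    fi
  done
  echo "${missing# }"
  [ -z "${missing}" ]
}

# node-ssm needs both of these on the local machine.
if absent="$(missing_tools aws session-manager-plugin)"; then
  echo "All prerequisites for node-ssm found"
else
  echo "Missing prerequisites: ${absent}"
fi
```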

- ns: A faster way to switch between namespaces in kubectl.

Installation of the ns Krew plugin:

| kubectl krew install ns

|

Change the active namespace of the current context and list secrets from cert-manager without using the --namespace or -n option:

| kubectl ns cert-manager

kubectl get secrets

|

| Context "arn:aws:eks:us-east-1:729560437327:cluster/k01" modified.

Active namespace is "cert-manager".

NAME TYPE DATA AGE

cert-manager-webhook-ca Opaque 3 107m

ingress-cert-staging kubernetes.io/tls 2 102m

letsencrypt-staging-dns Opaque 1 106m

sh.helm.release.v1.cert-manager.v1 helm.sh/release.v1 1 107m

|
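On machines where the ns plugin is not installed, the same change can be made with built-in kubectl commands. A small sketch; the two function names are hypothetical wrappers:

```bash
# Switch the namespace of the current context using only built-in kubectl commands.
set_namespace() {
  kubectl config set-context --current --namespace="$1"
}

# Print the namespace configured for the current context
# (empty output means the context falls back to "default").
current_namespace() {
  kubectl config view --minify --output 'jsonpath={..namespace}'
}
```

For example, set_namespace cert-manager followed by current_namespace should report cert-manager, after which kubectl get secrets behaves as in the output above.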

- The kubectl open-svc plugin makes services accessible via their ClusterIP from outside your cluster.

Installation of the open-svc Krew plugin:

| kubectl krew install open-svc

|

Open the Grafana Dashboard URL in the browser:

| kubectl open-svc kube-prometheus-stack-grafana -n kube-prometheus-stack

|

open-svc screenshot from official GitHub repository

- pod-lens: A kubectl plugin to show pod-related resources.

pod-lens logo

Installation of the pod-lens Krew plugin:

| kubectl krew install pod-lens

|

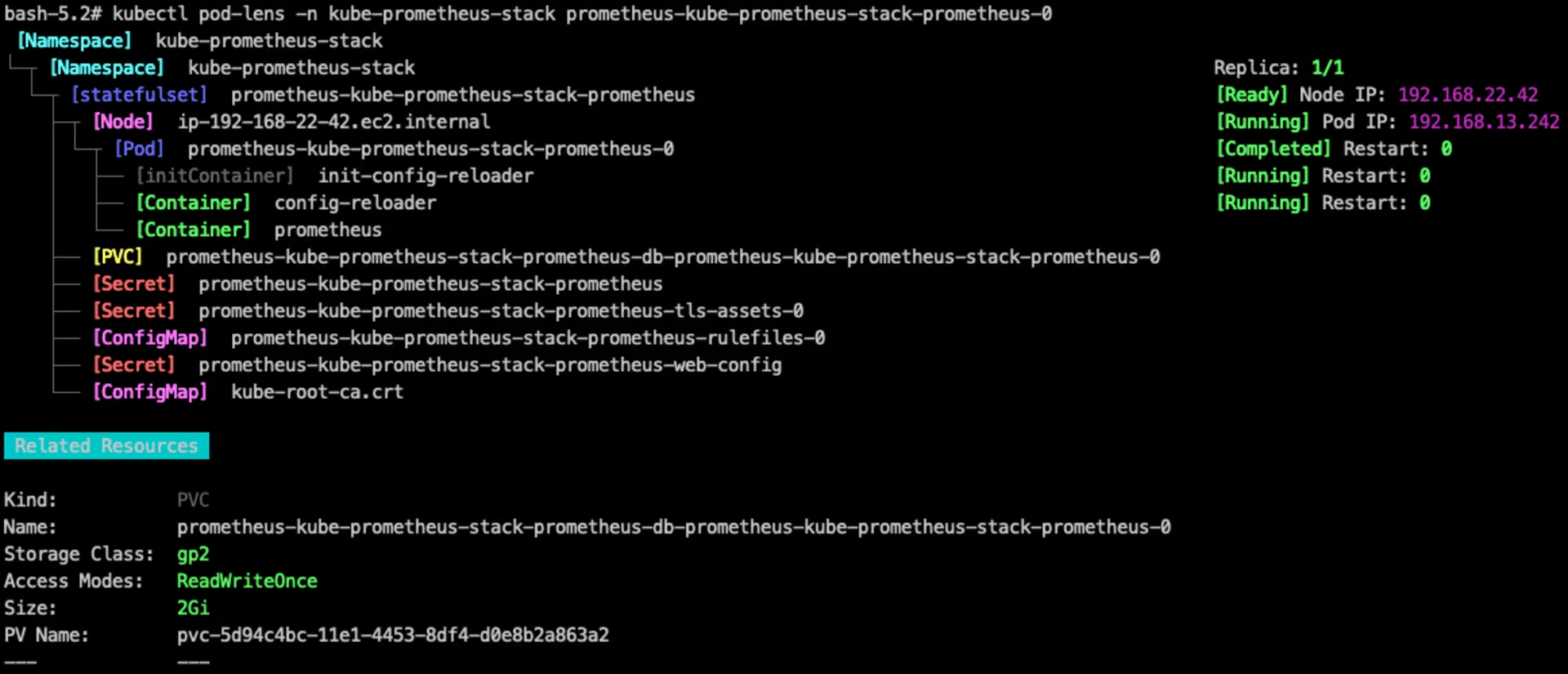

Find related workloads, namespace, node, service, configmap, secret, ingress, PVC, HPA, and PDB by pod name and display them in a tree structure:

| kubectl pod-lens -n kube-prometheus-stack prometheus-kube-prometheus-stack-prometheus-0

|

pod-lens showing details in kube-prometheus-stack namespace

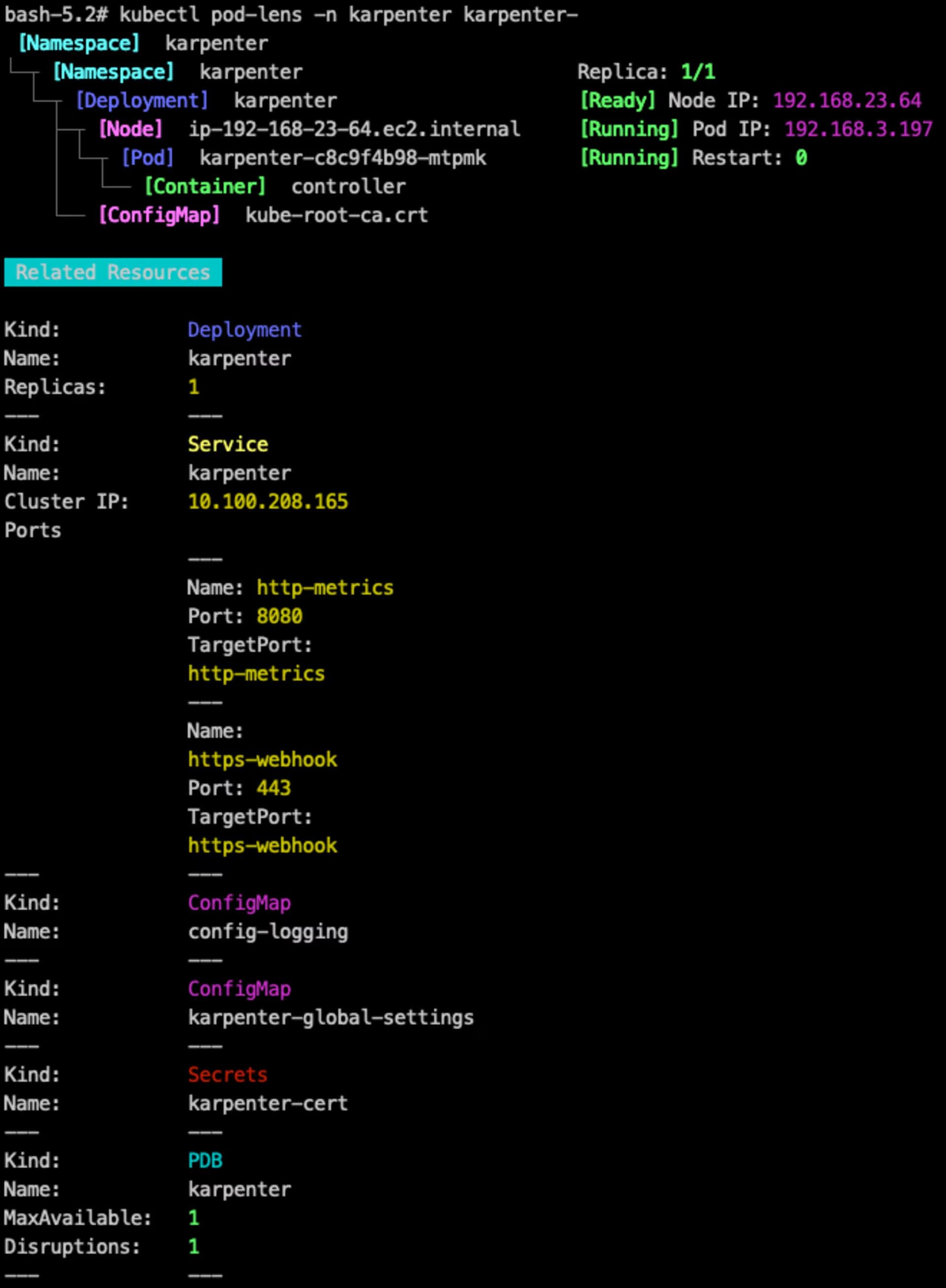

| kubectl pod-lens -n karpenter karpenter-

|

pod-lens showing details in karpenter namespace

- rbac-tool: A kubectl plugin for querying and visualizing Kubernetes RBAC permissions.

Installation of the rbac-tool Krew plugin:

| kubectl krew install rbac-tool

|

Show which subjects have RBAC get permissions for /apis:

| kubectl rbac-tool who-can get /apis

|

| TYPE | SUBJECT | NAMESPACE

+-------+----------------------+-----------+

Group | system:authenticated |

Group | system:masters |

User | eks:addon-manager |

|

Show which subjects have RBAC watch permissions for deployments.apps:

| kubectl rbac-tool who-can watch deployments.apps

|

| TYPE | SUBJECT | NAMESPACE

+----------------+------------------------------------------+-----------------------+

Group | eks:service-operations |

Group | system:masters |

ServiceAccount | deployment-controller | kube-system

ServiceAccount | disruption-controller | kube-system

ServiceAccount | eks-vpc-resource-controller | kube-system

ServiceAccount | generic-garbage-collector | kube-system

ServiceAccount | karpenter | karpenter

ServiceAccount | kube-prometheus-stack-kube-state-metrics | kube-prometheus-stack

ServiceAccount | resourcequota-controller | kube-system

User | eks:addon-manager |

User | eks:vpc-resource-controller |

User | system:kube-controller-manager |

|
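A single row of the table above can be cross-checked with the built-in kubectl auth can-i command. The sketch below uses a hypothetical helper name and assumes the current user is allowed to impersonate ServiceAccounts, which --as requires:

```bash
# Hypothetical helper: ask the API server whether a ServiceAccount may perform
# a verb on a resource, by impersonating that ServiceAccount.
sa_can_i() {
  namespace="$1"
  account="$2"
  verb="$3"
  resource="$4"
  kubectl auth can-i "${verb}" "${resource}" \
    --as="system:serviceaccount:${namespace}:${account}"
}
```

For instance, sa_can_i karpenter karpenter watch deployments.apps checks the karpenter row. Note that can-i evaluates the permission in the namespace of the current context unless a namespace flag is added, so the answer is not guaranteed to match a cluster-wide listing.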

Get details about the current “user”:

| kubectl rbac-tool whoami

|

| {Username: "kubernetes-admin",

UID: "aws-iam-authenticator:7xxxxxxxxxx7:AxxxxxxxxxxxxxxxxxxxL",

Groups: ["system:masters",

"system:authenticated"],

Extra: {accessKeyId: ["AxxxxxxxxxxxxxxxxxxA"],

arn: ["arn:aws:sts::7xxxxxxxxxx7:assumed-role/GitHubRole/ruzickap@mymac-k8s-1111111111"],

canonicalArn: ["arn:aws:iam::7xxxxxxxxxx7:role/GitHubRole"],

principalId: ["AxxxxxxxxxxxxxxxxxxxL"],

sessionName: ["ruzickap@mymac-k8s-1111111111"]}}

|

List the Kubernetes RBAC Roles/ClusterRoles used by a given User, ServiceAccount, or Group:

| kubectl rbac-tool lookup kube-prometheus

|

| SUBJECT | SUBJECT TYPE | SCOPE | NAMESPACE | ROLE

+------------------------------------------+----------------+-------------+-----------------------+-------------------------------------------+

kube-prometheus-stack-grafana | ServiceAccount | ClusterRole | | kube-prometheus-stack-grafana-clusterrole

kube-prometheus-stack-grafana | ServiceAccount | Role | kube-prometheus-stack | kube-prometheus-stack-grafana

kube-prometheus-stack-kube-state-metrics | ServiceAccount | ClusterRole | | kube-prometheus-stack-kube-state-metrics

kube-prometheus-stack-operator | ServiceAccount | ClusterRole | | kube-prometheus-stack-operator

kube-prometheus-stack-prometheus | ServiceAccount | ClusterRole | | kube-prometheus-stack-prometheus

|

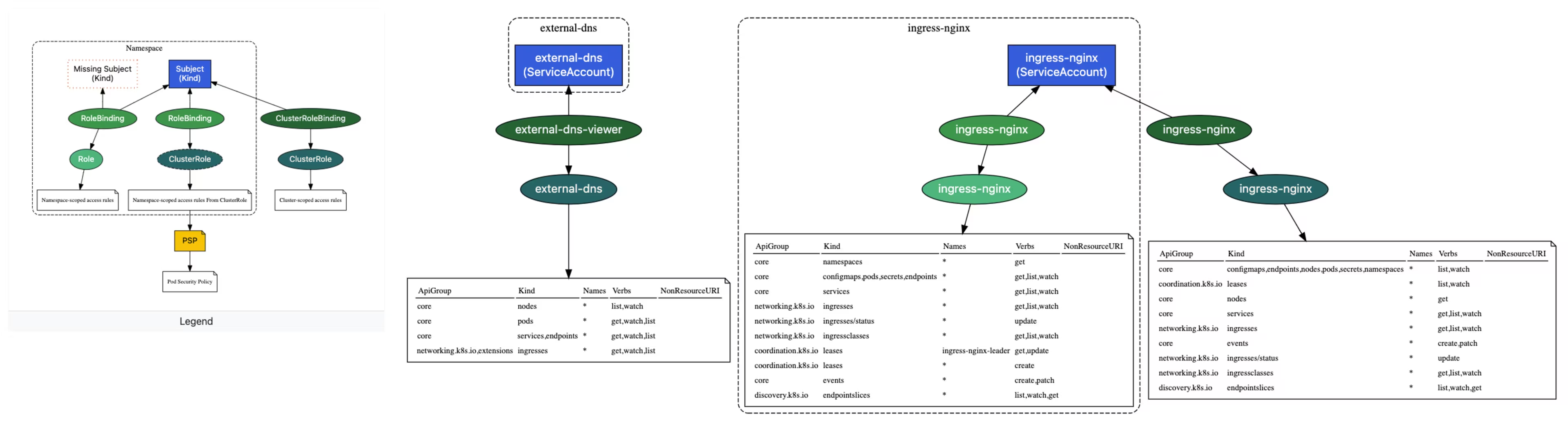

Visualize Kubernetes RBAC relationships:

| kubectl rbac-tool visualize --include-namespaces ingress-nginx,external-dns --outfile "${TMP_DIR}/rbac.html"

|

rbac-tool visualize

- resource-capacity: This plugin provides an overview of resource requests, limits, and utilization in a Kubernetes cluster.

Installation of the resource-capacity Krew plugin:

| kubectl krew install resource-capacity

|

Display resource requests, limits, and utilization for nodes:

| kubectl resource-capacity --pod-count --util

|

| NODE CPU REQUESTS CPU LIMITS CPU UTIL MEMORY REQUESTS MEMORY LIMITS MEMORY UTIL POD COUNT

* 1130m (29%) 400m (10%) 135m (3%) 1250Mi (27%) 5048Mi (110%) 2423Mi (53%) 29/220

ip-192-168-26-84.ec2.internal 515m (26%) 0m (0%) 72m (3%) 590Mi (25%) 2644Mi (116%) 1320Mi (57%) 16/110

ip-192-168-7-23.ec2.internal 615m (31%) 400m (20%) 64m (3%) 660Mi (29%) 2404Mi (105%) 1103Mi (48%) 13/110

|
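The MEMORY LIMITS column above exceeds 100% on every row, meaning the sum of memory limits is larger than what the nodes can provide. A small sketch for spotting such rows automatically; the function name is hypothetical, and it assumes the whitespace-separated column layout shown above, which may change between plugin versions:

```bash
# Hypothetical helper: read `kubectl resource-capacity --pod-count --util`
# output on stdin and print rows whose memory limits exceed 100%.
# Assumes the eleventh whitespace-separated field is the memory-limit
# percentage, e.g. "(110%)", as in the output above.
overcommitted_memory() {
  awk 'NR > 1 && NF >= 11 {
    pct = $11
    gsub(/[()%]/, "", pct)
    if (pct + 0 > 100) print $1, pct "%"
  }'
}

# Sample rows copied from the output above.
overcommitted_memory << 'EOF'
NODE CPU REQUESTS CPU LIMITS CPU UTIL MEMORY REQUESTS MEMORY LIMITS MEMORY UTIL POD COUNT
* 1130m (29%) 400m (10%) 135m (3%) 1250Mi (27%) 5048Mi (110%) 2423Mi (53%) 29/220
ip-192-168-26-84.ec2.internal 515m (26%) 0m (0%) 72m (3%) 590Mi (25%) 2644Mi (116%) 1320Mi (57%) 16/110
ip-192-168-7-23.ec2.internal 615m (31%) 400m (20%) 64m (3%) 660Mi (29%) 2404Mi (105%) 1103Mi (48%) 13/110
EOF
```

For the sample rows this prints the cluster total (*) with 110% and the two nodes with 116% and 105%. Against a live cluster the function would be fed from a pipe, for example kubectl resource-capacity --pod-count --util | overcommitted_memory.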

List resource requests, limits, and utilization for pods:

| kubectl resource-capacity --pods --util

|

| NODE NAMESPACE POD CPU REQUESTS CPU LIMITS CPU UTIL MEMORY REQUESTS MEMORY LIMITS MEMORY UTIL

* * * 1130m (29%) 400m (10%) 142m (3%) 1250Mi (27%) 5048Mi (110%) 2414Mi (53%)

ip-192-168-26-84.ec2.internal * * 515m (26%) 0m (0%) 79m (4%) 590Mi (25%) 2644Mi (116%) 1315Mi (57%)

ip-192-168-26-84.ec2.internal kube-system aws-node-79jc6 25m (1%) 0m (0%) 3m (0%) 0Mi (0%) 0Mi (0%) 32Mi (1%)

ip-192-168-26-84.ec2.internal kube-system aws-node-termination-handler-hj8hm 0m (0%) 0m (0%) 1m (0%) 0Mi (0%) 0Mi (0%) 12Mi (0%)

ip-192-168-26-84.ec2.internal cert-manager cert-manager-777fbdc9f8-ng8dg 0m (0%) 0m (0%) 1m (0%) 0Mi (0%) 0Mi (0%) 25Mi (1%)

ip-192-168-26-84.ec2.internal cert-manager cert-manager-cainjector-65857fccf8-krpr9 0m (0%) 0m (0%) 1m (0%) 0Mi (0%) 0Mi (0%) 22Mi (0%)

ip-192-168-26-84.ec2.internal cert-manager cert-manager-webhook-54f9d96756-plv84 0m (0%) 0m (0%) 1m (0%) 0Mi (0%) 0Mi (0%) 10Mi (0%)

ip-192-168-26-84.ec2.internal kube-system coredns-7975d6fb9b-hqmxm 100m (5%) 0m (0%) 1m (0%) 70Mi (3%) 170Mi (7%) 16Mi (0%)

ip-192-168-26-84.ec2.internal kube-system coredns-7975d6fb9b-jhzkw 100m (5%) 0m (0%) 2m (0%) 70Mi (3%) 170Mi (7%) 15Mi (0%)

ip-192-168-26-84.ec2.internal kube-system ebs-csi-controller-8cc6766cf-nsk5r 60m (3%) 0m (0%) 3m (0%) 240Mi (10%) 1536Mi (67%) 61Mi (2%)

ip-192-168-26-84.ec2.internal kube-system ebs-csi-node-mct6d 30m (1%) 0m (0%) 1m (0%) 120Mi (5%) 768Mi (33%) 22Mi (0%)

ip-192-168-26-84.ec2.internal ingress-nginx ingress-nginx-controller-9d7cf6ffb-xcw5t 100m (5%) 0m (0%) 1m (0%) 90Mi (3%) 0Mi (0%) 84Mi (3%)

ip-192-168-26-84.ec2.internal karpenter karpenter-6bd66c788f-xnc4s 0m (0%) 0m (0%) 11m (0%) 0Mi (0%) 0Mi (0%) 146Mi (6%)

ip-192-168-26-84.ec2.internal kube-prometheus-stack kube-prometheus-stack-grafana-896f8645-6q9lb 0m (0%) 0m (0%) 8m (0%) 0Mi (0%) 0Mi (0%) 229Mi (10%)

ip-192-168-26-84.ec2.internal kube-prometheus-stack kube-prometheus-stack-prometheus-node-exporter-m4k5m 0m (0%) 0m (0%) 3m (0%) 0Mi (0%) 0Mi (0%) 10Mi (0%)

ip-192-168-26-84.ec2.internal kube-system kube-proxy-6rfnc 100m (5%) 0m (0%) 1m (0%) 0Mi (0%) 0Mi (0%) 12Mi (0%)

ip-192-168-26-84.ec2.internal kube-system metrics-server-57bd7b96f9-nllnn 0m (0%) 0m (0%) 3m (0%) 0Mi (0%) 0Mi (0%) 20Mi (0%)

ip-192-168-26-84.ec2.internal oauth2-proxy oauth2-proxy-87bd47488-v97kg 0m (0%) 0m (0%) 1m (0%) 0Mi (0%) 0Mi (0%) 8Mi (0%)

ip-192-168-7-23.ec2.internal * * 615m (31%) 400m (20%) 64m (3%) 660Mi (29%) 2404Mi (105%) 1099Mi (48%)

ip-192-168-7-23.ec2.internal kube-prometheus-stack alertmanager-kube-prometheus-stack-alertmanager-0 200m (10%) 200m (10%) 1m (0%) 250Mi (10%) 50Mi (2%) 20Mi (0%)

ip-192-168-7-23.ec2.internal kube-system aws-node-bg2hc 25m (1%) 0m (0%) 2m (0%) 0Mi (0%) 0Mi (0%) 34Mi (1%)

ip-192-168-7-23.ec2.internal kube-system aws-node-termination-handler-s66vl 0m (0%) 0m (0%) 1m (0%) 0Mi (0%) 0Mi (0%) 12Mi (0%)

ip-192-168-7-23.ec2.internal kube-system ebs-csi-controller-8cc6766cf-6v668 60m (3%) 0m (0%) 2m (0%) 240Mi (10%) 1536Mi (67%) 55Mi (2%)

ip-192-168-7-23.ec2.internal kube-system ebs-csi-node-zx7bk 30m (1%) 0m (0%) 1m (0%) 120Mi (5%) 768Mi (33%) 21Mi (0%)

ip-192-168-7-23.ec2.internal external-dns external-dns-7fdb8769ff-dxpdn 0m (0%) 0m (0%) 1m (0%) 0Mi (0%) 0Mi (0%) 21Mi (0%)

ip-192-168-7-23.ec2.internal forecastle forecastle-58d7ccb8f8-hlsf5 0m (0%) 0m (0%) 1m (0%) 0Mi (0%) 0Mi (0%) 5Mi (0%)

ip-192-168-7-23.ec2.internal kube-prometheus-stack kube-prometheus-stack-kube-state-metrics-669bd5c594-vfznb 0m (0%) 0m (0%) 2m (0%) 0Mi (0%) 0Mi (0%) 13Mi (0%)

ip-192-168-7-23.ec2.internal kube-prometheus-stack kube-prometheus-stack-operator-7f45586f68-9rz6j 0m (0%) 0m (0%) 1m (0%) 0Mi (0%) 0Mi (0%) 24Mi (1%)

ip-192-168-7-23.ec2.internal kube-prometheus-stack kube-prometheus-stack-prometheus-node-exporter-x5bhm 0m (0%) 0m (0%) 2m (0%) 0Mi (0%) 0Mi (0%) 10Mi (0%)

ip-192-168-7-23.ec2.internal kube-system kube-proxy-gzqct 100m (5%) 0m (0%) 1m (0%) 0Mi (0%) 0Mi (0%) 13Mi (0%)

ip-192-168-7-23.ec2.internal mailhog mailhog-6f54fccf85-dgbp2 0m (0%) 0m (0%) 1m (0%) 0Mi (0%) 0Mi (0%) 4Mi (0%)

ip-192-168-7-23.ec2.internal kube-prometheus-stack prometheus-kube-prometheus-stack-prometheus-0 200m (10%) 200m (10%) 25m (1%) 50Mi (2%) 50Mi (2%) 415Mi (18%)

|

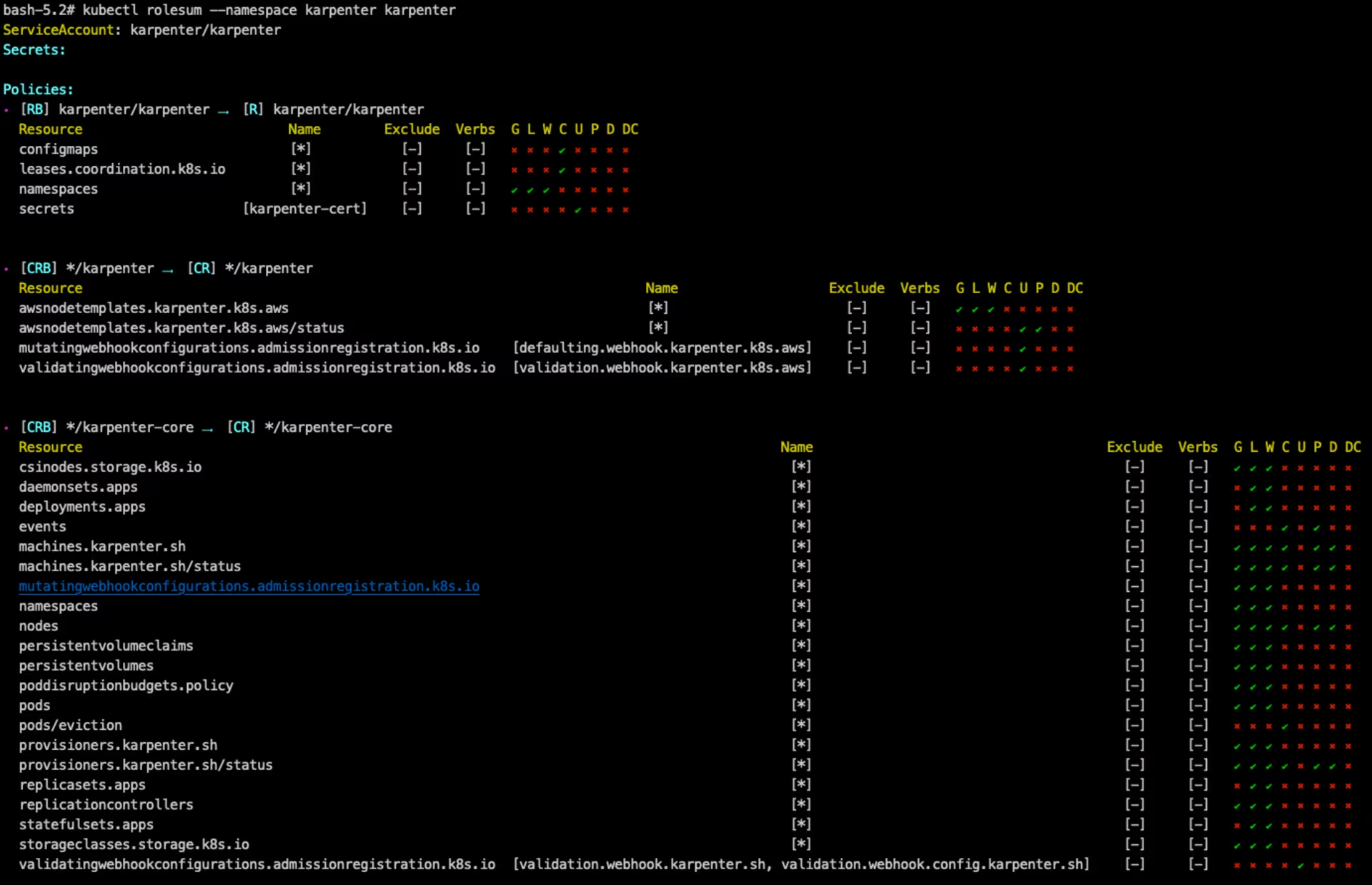

- rolesum: This plugin summarizes Kubernetes RBAC roles for specified subjects.

Installation of the rolesum Krew plugin:

| kubectl krew install rolesum

|

Show details for the karpenter ServiceAccount:

| kubectl rolesum --namespace karpenter karpenter

|

rolesum screenshot

- stern: A tool for multi-pod and multi-container log tailing in Kubernetes.

Installation of the stern Krew plugin:

| kubectl krew install stern

|

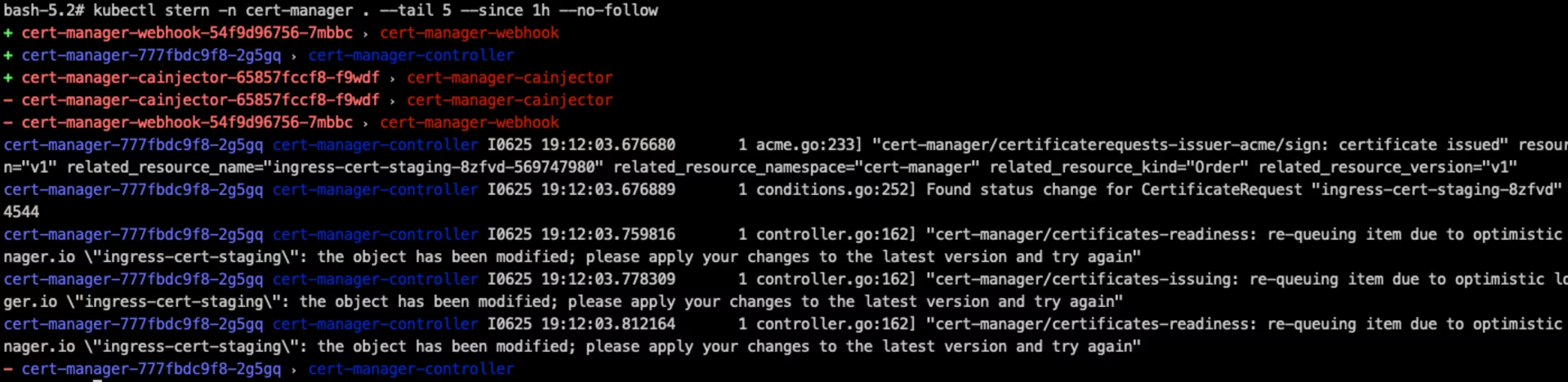

Check logs for all pods in the cert-manager namespace from the past hour:

| kubectl stern -n cert-manager . --tail 5 --since 1h --no-follow

|

stern screenshot

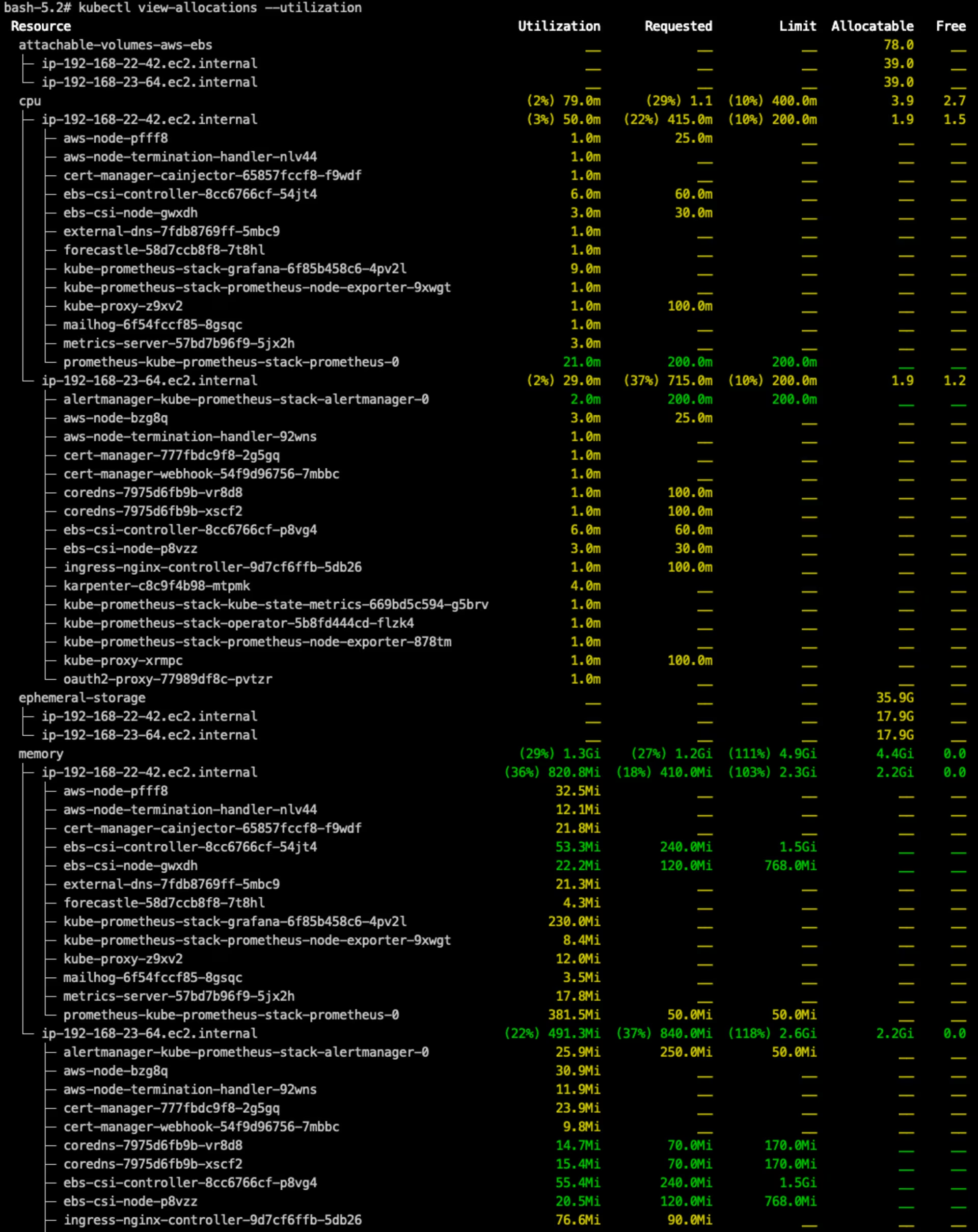
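Where stern is not available, a rougher equivalent can be put together with plain kubectl logs. This is only a sketch: the helper name is hypothetical, and unlike stern it selects pods by label rather than by a name pattern, so the selector passed in is an assumption about how the pods are labelled:

```bash
# Hypothetical helper: approximate the stern call above with `kubectl logs`.
# Prints the last 5 lines from the past hour for every container of every
# pod matching the label selector, prefixed with the pod and container name.
recent_logs() {
  namespace="$1"
  selector="$2"
  kubectl logs --namespace "${namespace}" --selector "${selector}" \
    --all-containers --prefix --since=1h --tail=5
}
```

A call could look like recent_logs cert-manager app.kubernetes.io/instance=cert-manager, provided the cert-manager pods carry that label in your installation.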

- view-allocations: This kubectl plugin lists resource allocations (CPU, memory, GPU, etc.) as defined in the manifests of nodes and running pods.

Installation of the view-allocations Krew plugin:

| kubectl krew install view-allocations

|

Show resource allocations together with their current utilization:

| kubectl view-allocations --utilization

|

view-allocations screenshot

- Viewnode displays Kubernetes cluster nodes along with their pods and containers.

Installation of the viewnode Krew plugin:

| kubectl krew install viewnode

|

Show nodes and their pods across all namespaces, including memory usage:

| kubectl viewnode --all-namespaces --show-metrics

|

| 29 pod(s) in total

0 unscheduled pod(s)

2 running node(s) with 29 scheduled pod(s):

- ip-192-168-19-143.ec2.internal running 16 pod(s) (linux/arm64/containerd://1.6.20+bottlerocket | mem: 1.3 GiB)

* cert-manager: cert-manager-777fbdc9f8-qhk2d (running | mem usage: 24.8 MiB)

* cert-manager: cert-manager-cainjector-65857fccf8-t68lk (running | mem usage: 22.4 MiB)

* cert-manager: cert-manager-webhook-54f9d96756-8nbqx (running | mem usage: 9.4 MiB)

* ingress-nginx: ingress-nginx-controller-9d7cf6ffb-vtjhx (running | mem usage: 74.3 MiB)

* karpenter: karpenter-79455db76f-79q7h (running | mem usage: 164.2 MiB)

* kube-prometheus-stack: kube-prometheus-stack-grafana-896f8645-972n2 (running | mem usage: 232.2 MiB)

* kube-prometheus-stack: kube-prometheus-stack-prometheus-node-exporter-rw9kh (running | mem usage: 8.0 MiB)

* kube-system: aws-node-gfn9v (running | mem usage: 30.8 MiB)

* kube-system: aws-node-termination-handler-fhcmv (running | mem usage: 11.9 MiB)

* kube-system: coredns-7975d6fb9b-29885 (running | mem usage: 14.8 MiB)

* kube-system: coredns-7975d6fb9b-mrfws (running | mem usage: 14.6 MiB)

* kube-system: ebs-csi-controller-8cc6766cf-x5mb9 (running | mem usage: 55.3 MiB)

* kube-system: ebs-csi-node-xtqww (running | mem usage: 21.2 MiB)

* kube-system: kube-proxy-c97d8 (running | mem usage: 11.9 MiB)

* kube-system: metrics-server-57bd7b96f9-mqnqq (running | mem usage: 17.7 MiB)

* oauth2-proxy: oauth2-proxy-66b84b895c-8hv8d (running | mem usage: 6.8 MiB)

- ip-192-168-3-70.ec2.internal running 13 pod(s) (linux/arm64/containerd://1.6.20+bottlerocket | mem: 940.6 MiB)

* external-dns: external-dns-7fdb8769ff-hjsxr (running | mem usage: 19.6 MiB)

* forecastle: forecastle-58d7ccb8f8-l9dfs (running | mem usage: 4.3 MiB)

* kube-prometheus-stack: alertmanager-kube-prometheus-stack-alertmanager-0 (running | mem usage: 18.2 MiB)

* kube-prometheus-stack: kube-prometheus-stack-kube-state-metrics-669bd5c594-jqcjb (running | mem usage: 12.2 MiB)

* kube-prometheus-stack: kube-prometheus-stack-operator-7f45586f68-jfzhb (running | mem usage: 22.8 MiB)

* kube-prometheus-stack: kube-prometheus-stack-prometheus-node-exporter-g7t7l (running | mem usage: 8.5 MiB)

* kube-prometheus-stack: prometheus-kube-prometheus-stack-prometheus-0 (running | mem usage: 328.1 MiB)

* kube-system: aws-node-termination-handler-hrjv8 (running | mem usage: 11.9 MiB)

* kube-system: aws-node-vsr54 (running | mem usage: 30.8 MiB)

* kube-system: ebs-csi-controller-8cc6766cf-69plv (running | mem usage: 53.0 MiB)

* kube-system: ebs-csi-node-j6p6d (running | mem usage: 21.5 MiB)

* kube-system: kube-proxy-d6wqx (running | mem usage: 10.5 MiB)

* mailhog: mailhog-6f54fccf85-6s7bt (running | mem usage: 3.4 MiB)

|

Show various details for the kube-prometheus-stack namespace:

| kubectl viewnode -n kube-prometheus-stack --container-block-view --show-containers --show-metrics --show-pod-start-times --show-requests-and-limits

|

| 7 pod(s) in total

0 unscheduled pod(s)

2 running node(s) with 7 scheduled pod(s):

- ip-192-168-19-143.ec2.internal running 2 pod(s) (linux/arm64/containerd://1.6.20+bottlerocket | mem: 1.3 GiB)

* kube-prometheus-stack-grafana-896f8645-972n2 (running/Sat Jun 24 11:34:12 UTC 2023 | mem usage: 229.3 MiB) 3 container/s:

0: grafana (running) [cpu: - | mem: - | mem usage: 86.7 MiB]

1: grafana-sc-dashboard (running) [cpu: - | mem: - | mem usage: 70.6 MiB]

2: grafana-sc-datasources (running) [cpu: - | mem: - | mem usage: 72.0 MiB]

* kube-prometheus-stack-prometheus-node-exporter-rw9kh (running/Sat Jun 24 11:34:12 UTC 2023 | mem usage: 8.2 MiB) 1 container/s:

0: node-exporter (running) [cpu: - | mem: - | mem usage: 8.2 MiB]

- ip-192-168-3-70.ec2.internal running 5 pod(s) (linux/arm64/containerd://1.6.20+bottlerocket | mem: 942.2 MiB)

* alertmanager-kube-prometheus-stack-alertmanager-0 (running/Sat Jun 24 11:34:15 UTC 2023 | mem usage: 18.2 MiB) 2 container/s:

0: alertmanager (running) [cpu: - | mem: 200Mi<- | mem usage: 15.6 MiB]

1: config-reloader (running) [cpu: 200m<200m | mem: 50Mi<50Mi | mem usage: 2.7 MiB]

* kube-prometheus-stack-kube-state-metrics-669bd5c594-jqcjb (running/Sat Jun 24 11:34:12 UTC 2023 | mem usage: 12.2 MiB) 1 container/s:

0: kube-state-metrics (running) [cpu: - | mem: - | mem usage: 12.2 MiB]

* kube-prometheus-stack-operator-7f45586f68-jfzhb (running/Sat Jun 24 11:34:12 UTC 2023 | mem usage: 22.5 MiB) 1 container/s:

0: kube-prometheus-stack (running) [cpu: - | mem: - | mem usage: 22.5 MiB]

* kube-prometheus-stack-prometheus-node-exporter-g7t7l (running/Sat Jun 24 11:34:12 UTC 2023 | mem usage: 8.7 MiB) 1 container/s:

0: node-exporter (running) [cpu: - | mem: - | mem usage: 8.7 MiB]

* prometheus-kube-prometheus-stack-prometheus-0 (running/Sat Jun 24 11:34:20 UTC 2023 | mem usage: 328.1 MiB) 2 container/s:

0: config-reloader (running) [cpu: 200m<200m | mem: 50Mi<50Mi | mem usage: 6.0 MiB]

1: prometheus (running) [cpu: - | mem: - | mem usage: 322.0 MiB]

|
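All plugins covered in this post can also be installed in one pass. A minimal sketch, assuming Krew is already on PATH as set up in the installation step; the variable and function names are hypothetical:

```bash
# Plugins covered in this post, in the order they appear.
PLUGINS="cert-manager get-all ice ktop deprecations node-ssm ns open-svc pod-lens rbac-tool resource-capacity rolesum stern view-allocations viewnode"

# Install each plugin separately so that one failure does not stop the rest.
install_plugins() {
  for plugin in ${PLUGINS}; do
    kubectl krew install "${plugin}" || echo "failed to install ${plugin}" >&2
  done
}
```

Running install_plugins then replaces the individual kubectl krew install commands shown in each section.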

There are a few other kubectl Krew plugins that I have looked at but am not currently using: aks, view-cert, cost, cyclonus, graph, ingress-nginx, node-shell, nodepools, np-viewer, oomd, permissions, popeye, pv-migrate, score, ssh-jump, tree, unlimited, whoami.

Clean-up

Remove files from the ${TMP_DIR} directory:

| for FILE in "${TMP_DIR}"/{krew-linux_amd64,rbac.html}; do

if [[ -f "${FILE}" ]]; then

rm -v "${FILE}"

else

echo "*** File not found: ${FILE}"

fi

done

|

Enjoy … 😉
